
Foundational

The Paper FingerPhone, a sustainably designed electronic Musical Instrument

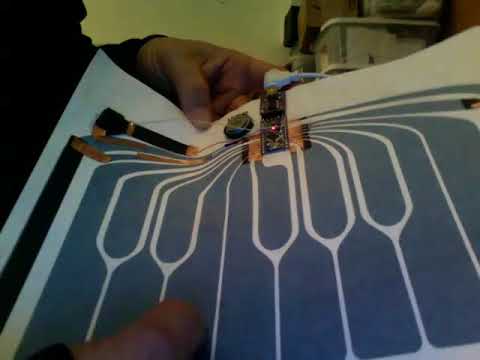

Fri, 01/26/2018 - 15:00 - AdrianFreed

The next version used a pizza box as the resonator to demonstrate reuse, as the sustainability aspect was emphasized.

Eventually it was placed on cardboard to be attached to poster board, where it won the "Best Poster" award at the NIME conference.

The conference paper associated with that poster presentation was chosen for inclusion in the NIME reader.

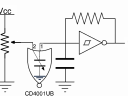

Notations for Performative Electronics: the case of the CMOS Varactor

Mon, 03/08/2021 - 14:18 - AdrianFreed

Summary

Demonstrating an unusual application of a CMOS NOR gate to implement a CMOS-varactor-controlled VCO, I explore the idea of circuit schematics as a music notation and how scholars and practitioners might create and analyze notations as part of the rich web of interactions that constitute current music practice.

Machine Learning and AI at CNMAT

Fri, 07/24/2020 - 14:27 - AdrianFreed

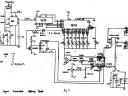

Analog and Digital Sound Synthesis as Relaxation Oscillators

Thu, 04/11/2019 - 16:40 - AdrianFreed

The following pages provide context for the workshop and include links to contributions from my co-teacher, Martin De Bie. This includes pointers to various iterations of his 555 timer-based Textilo project.

This project will continue to be expanded with further relaxation oscillator schemes.

Publications (Bibliography) Workflow 2018

Mon, 01/29/2018 - 14:04 - AdrianFreed

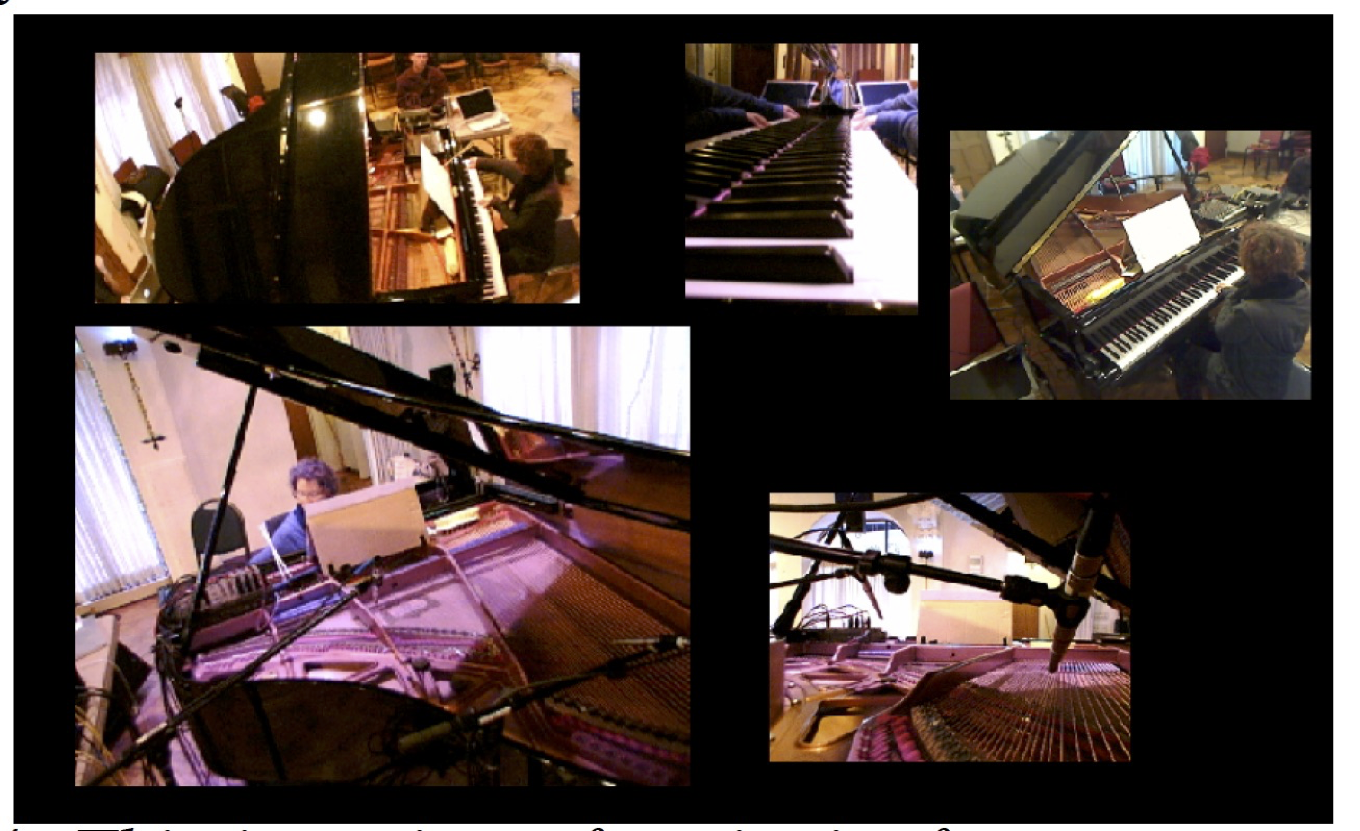

Pervasive Cameras: Making Sense of Many Angles using Radial Basis Function Interpolation and Salience Analysis

Mon, 01/15/2018 - 14:38 - AdrianFreed

Abstract. This paper describes situations which lend themselves to the use of numerous cameras, and techniques for displaying many camera streams simultaneously. The focus here is on the role of the “director” in sorting out the onscreen content in an artistic and understandable manner. We describe techniques which we have employed in a system designed for capturing and displaying many video feeds, incorporating automated and high-level control of the composition on the screen.

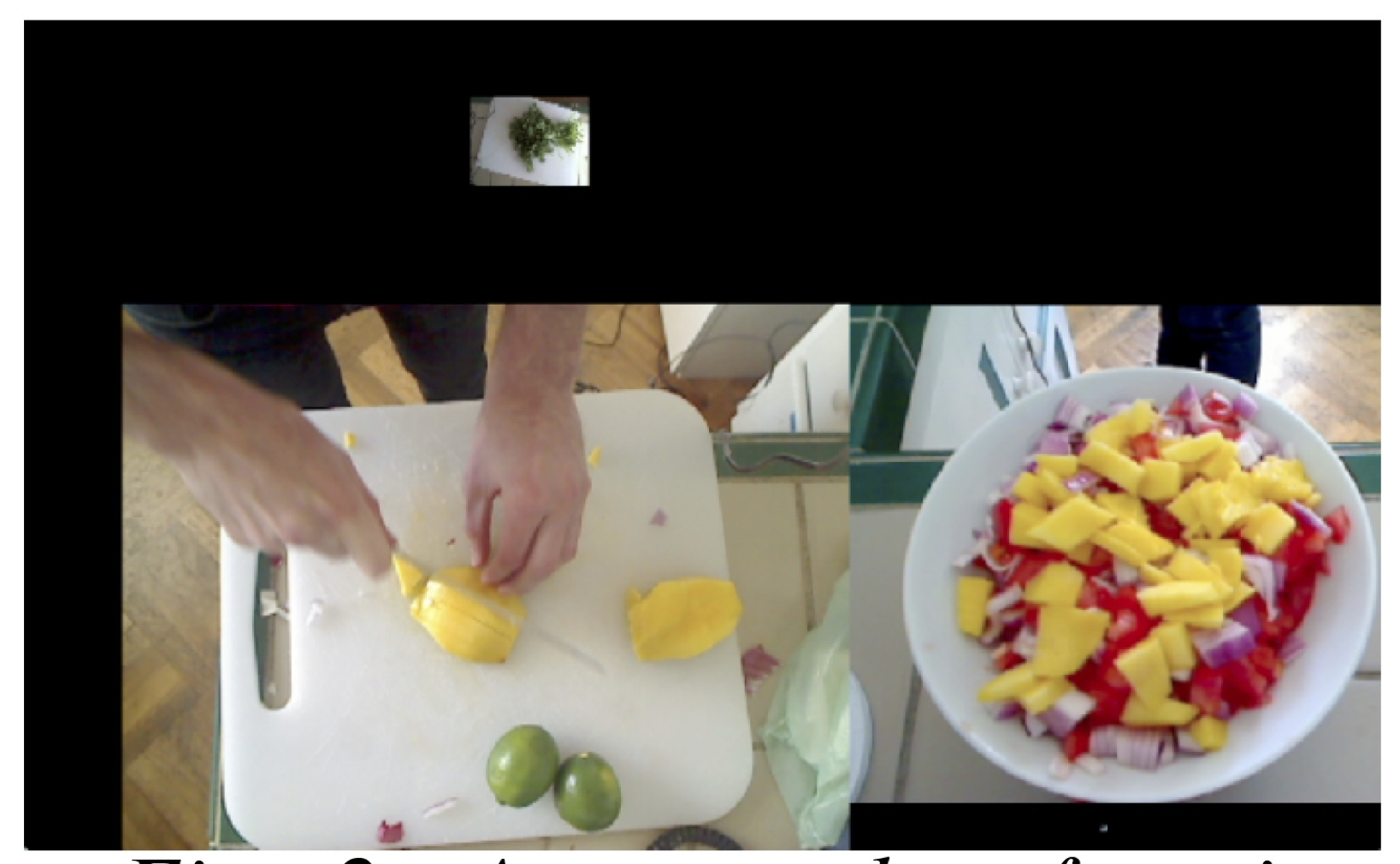

1 A Hypothetical Cooking Show

A typical television cooking show might include a number of cameras capturing the action and techniques on stage from a variety of angles. If the TV chef were making salsa, for example, cameras and crew might be set up by the sink to get a vegetable-washing scene, over the cutting board to capture the cutting technique, next to a mixing bowl to show the finished salsa, and at a few wider angles that can focus on the chef's face while he or she explains the process, or on the kitchen scene as a whole. The director of such a show would then choose the series of angles that best visually describe the process and technique of making salsa. Now imagine trying to make a live cooking show presentation of the same recipe by oneself. Instead of relying on a team of camera people, the prospective chef could simply set up a number of high-quality, inexpensive webcams in these key positions in his or her own kitchen to capture the process and banter from many angles. Along with the role of star, the chef must also act as director and compose these many angles into a cohesive and understandable narrative for the live audience.

Here we describe techniques for displaying many simultaneous video streams using automated and high-level control of the cameras' positions and frame sizes on the screen, allowing a solo director to compose many angles quickly, easily, and artistically.

2 Collage of Viewpoints

Cutting between multiple viewpoints can obscure one important aspect in favor of another. In the cooking show example, a picture-in-picture approach would allow the display of both a closeup of a tomato being chopped and the face of the chef describing his or her cutting technique. The multi-angle approach is a powerful technique for understanding all of the action being captured in a scene; it is also artistically compelling and greatly simplifies the work of the director of such a scene. The multi-camera setup lends itself to a collage of framed video streams on a single screen (Figure 1 shows an example of this multi-angle approach). We would like to avoid a security-camera-style display that would leave much of the screen filled with unimportant content, like a view of a sink not being used by anyone.

Figure 1: A shot focusing on the cilantro being washed

We focus attention on a single frame by making it larger than the surrounding frames or moving it closer to the center. The system is designed to take in a number of scaling factors (user-defined and automated) which multiply together to determine the final frame size and position. The user-defined scaling factor is set with a fader on each of the video channels. This is useful for setting initial levels and biasing one frame over another in the overall mix. Setting and adjusting separate channels can be time-consuming, so we give the user a higher level of control that facilitates scaling many video streams at once, smoothly and quickly. The rbfi (radial basis function interpolation) object for Max/MSP/Jitter from CNMAT (Center for New Music and Audio Technologies) allows the user to switch easily between preset arrangements and also to explore the infinite gradients between these presets using interpolation [1]. rbfi lets the user place data points (presets in this case) anywhere in a two-dimensional space and explore that space with a cursor. The weight of each preset is a power function of its distance from the cursor. For example, one preset point might enlarge all of the video frames from cameras at particular positions in the room, say by the kitchen island, so that as the chef walks over to the kitchen island, all of the cameras near the island enlarge and all of the other cameras shrink. Another preset might bias cameras focused on fruits. With rbfi, the user can slide the cursor to whichever preset best fits the current situation. With this simple yet powerful high-level control, a user is able to compose the scene quickly and artistically, even while chopping onions.
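The preset weighting described above can be sketched as inverse-distance weighting. This is a minimal Python illustration and not the rbfi object's actual implementation: the function names, the exponent `power`, the small constant `eps`, and the preset coordinates and scale values are all assumptions chosen for the example.

```python
import math

def preset_weights(cursor, presets, power=2.0, eps=1e-9):
    """Return normalized weights, one per preset, for a 2-D cursor position.

    Each raw weight is 1 / distance**power, so nearer presets dominate.
    `power` and `eps` are illustrative assumptions, not rbfi parameters.
    """
    raw = []
    for (px, py) in presets:
        d = math.hypot(cursor[0] - px, cursor[1] - py)
        raw.append(1.0 / (d ** power + eps))
    total = sum(raw)
    return [w / total for w in raw]

def blend_scales(weights, preset_scales):
    """Blend the per-camera scale factors stored in each preset."""
    n_cams = len(preset_scales[0])
    return [
        sum(w * scales[i] for w, scales in zip(weights, preset_scales))
        for i in range(n_cams)
    ]

# Two hypothetical presets: "island" enlarges cameras 0 and 1,
# "fruit" enlarges camera 2.
presets = [(0.0, 0.0), (1.0, 0.0)]
preset_scales = [[2.0, 2.0, 0.5], [0.5, 0.5, 2.0]]

weights = preset_weights((0.25, 0.0), presets)
scales = blend_scales(weights, preset_scales)
```

With the cursor a quarter of the way from the first preset to the second, the first preset receives most of the weight, so cameras 0 and 1 stay large while camera 2 stays small. The blended values would then be multiplied with the per-channel fader values.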

Figure 2: A screenshot focusing on the mango being chopped and the mixing bowl

3 Automating Control

Another technique we employ is to automate the direction of the scene by analyzing the content of each individual frame and then resizing to maximize the area of the frames containing the most salient features. This approach makes for fluid and dynamic screen content which focuses on the action in the scene without any person needing to operate the controls. One such analysis measures the amount of motion, quantified by taking a running average of the number of changed pixels between successive frames in one video stream. The most dynamic video stream has the largest motion scaling factor, while the others shrink from their relative lack of motion. Another analysis detects faces using the OpenCV library and then promotes video streams with faces in them. The multiplied combination of user-defined and automated weight adjustments determines each frame's final size on the screen. Using these two automations with the cooking show example, if the chef looks up at the camera and starts chopping a tomato, the video streams that contain the chef's face and the tomato being chopped would be promoted to the largest frames in the scene while the other, less important frames shrink to accommodate the two.
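The motion analysis can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than the system's code: frames are plain nested lists of grayscale values, the change `threshold` and `smoothing` constants are invented for the example, and an exponential moving average stands in for the unspecified "running average". How the average is mapped to a scaling factor is not described in the text, so it is left to the caller.

```python
def changed_pixels(prev, curr, threshold=16):
    """Count pixels whose grayscale value changed by more than `threshold`."""
    return sum(
        1
        for row_prev, row_curr in zip(prev, curr)
        for a, b in zip(row_prev, row_curr)
        if abs(a - b) > threshold
    )

class MotionMeter:
    """Exponential running average of changed-pixel counts for one stream."""

    def __init__(self, smoothing=0.5):
        self.smoothing = smoothing  # assumed value; higher reacts faster
        self.average = 0.0
        self.prev = None

    def update(self, frame):
        # The first frame has nothing to compare against, so it only primes the meter.
        if self.prev is not None:
            count = changed_pixels(self.prev, frame)
            self.average += self.smoothing * (count - self.average)
        self.prev = frame
        return self.average

def frame_size(user_factor, motion_factor, face_factor):
    """Final size is the product of the user-defined and automated factors."""
    return user_factor * motion_factor * face_factor
```

A stream in which nothing moves keeps an average near zero, while a busy stream accumulates a larger value; multiplying the factors means a fader set by the user still biases the result even when automation is active.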

Figure 3: A screenshot of the finished product and some cleanup

Positioning on the screen is also automated in this system. The frames are able to float anywhere on and off the screen while edge detection ensures that no frames overlap. The user sets the strength of a few different forces that are applied to the positions of the frames on the screen. One force propels all of the frames towards the center of the screen. Another force pushes the frames to rearrange their relative positions on the screen. No single influence dictates the exact positioning or size of any video frame; this is determined only by the complex interaction of all of these scaling factors and forces.
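One way to picture the interaction of such forces is a simple per-step update of the frame centers. The sketch below is only an assumption about how forces of this kind could be combined: the text does not specify the second force, so a pairwise repulsion is used as a stand-in, the `pull` and `push` strengths are invented, and the edge detection that prevents overlap is not modeled.

```python
def step(positions, center=(0.5, 0.5), pull=0.1, push=0.01):
    """Advance frame centers by one step under two illustrative forces."""
    new_positions = []
    for i, (x, y) in enumerate(positions):
        # Force 1: pull toward the screen center.
        fx = pull * (center[0] - x)
        fy = pull * (center[1] - y)
        # Force 2 (assumed): push away from every other frame, stronger when close.
        for j, (ox, oy) in enumerate(positions):
            if i == j:
                continue
            dx, dy = x - ox, y - oy
            dist_sq = dx * dx + dy * dy + 1e-9
            fx += push * dx / dist_sq
            fy += push * dy / dist_sq
        new_positions.append((x + fx, y + fy))
    return new_positions

# Two frames at opposite edges of a unit-square screen drift toward the center.
frames = [(0.1, 0.5), (0.9, 0.5)]
frames_after = step(frames)
```

Because each frame's movement is the sum of every force acting on it, no single setting fixes a frame's position, which matches the behavior described above.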

4 Telematic Example

Aside from a hypothetical self-made cooking show, a tested application of these techniques is in a telematic concert situation. The extensive use of webcams on the stage works well in a colocation concert where the audience might be in a remote location from the performers. Many angles on one scene give the audience more of a tele-immersive experience. Audiences can also experience fine details, like a performer's subtle playing inside the piano or a bassist's intricate fretboard work, even when they are not at the location or are seated far from the stage. The potential issue is sorting out all of these video streams without overwhelming the viewer with content. This can be achieved without a large crew of videographers at each site, by a single director dynamically resizing and rearranging the frames based on feel or cues as well as on analysis of the video streams' content.

Figure 4: A view of a pianist from many angles, which gives an audience a good understanding of the room and all of the player's techniques inside the piano and on the keyboard.

References

1. Freed, A., MacCallum, J., Schmeder, A., Wessel, D.: Visualizations and Interaction Strategies for Hybridization Interfaces. New Interfaces for Musical Expression (2010)

Pervasive Cameras: Making Sense of Many Angles using Radial Basis Function Interpolation and Salience Analysis, Pervasive 2011, 12/06/2011, San Francisco, CA, (2011)

The Paper FingerPhone: a case study of Musical Instrument Redesign for Sustainability

Mon, 01/15/2018 - 14:38 - AdrianFreed

Commentary proposed for NIME Reader

The fingerphone is my creative response to three umbrages: the waste and unsustainability of musical instrument manufacturing practices, the prevailing absence of long historical research to underwrite claims of newness in NIME community projects, and the timbral poverty of the singular, strident sawtooth waveform of the Stylophone, the point of departure for the fingerphone instrument design.

This paper is the first at NIME of my ongoing provocations to the community to enlarge what we may mean by “new” and “musical expression”. I propose a change of scale away from solipsistic narratives of instrument builders and players to communitarian accounts that celebrate plural agencies and mediations [Born]. The opening gesture in this direction is a brief historicization of the fingerphone instrument. Avoiding the conventional trope of just differentiating this instrument from its immediate predecessors to establish “newness”, the fingerphone is enjoined to two rich instrumental traditions, the histories of which are still largely unwritten: stylus instruments, and electrosomatophones. The potential size of this iceberg is signaled by citing a rarely-cited musical stylus project from 1946 instead of the usually cited projects of the 1970’s, e.g. Xenakis’s UPIC or the Fairlight CMI lightpen.

Electrosomatophones are electronic sounding instruments that integrate people’s bodies into their circuits. They are clearly attested in depictions from the eighteenth century of Stephen Gray’s “flying boy” experiments and demonstrations, where a bell is rung by electrostatic forces produced by electric charges stored in the body of a boy suspended in silks. Electrosomatophones appear regularly in the historical record from this period to the present day.

The production of Lee De Forest’s audion tube is an important disruptive moment because the vacuum tube provided amplification and electrical isolation permitting loud sounds to be emitted from electrosomatophones without the pain of correspondingly large currents running through the performer’s body. While the theremin is the electrosomatophone that has gained the most social traction, massification of electrosomatophone use began with the integration of capacitive multitouch sensing into cellphones–an innovation prefigured by Bill Buxton [Buxton] and Bob Boie’s [radio drum] inventions of the mid-1980’s.

Achieving the sustainable design properties of the fingerphone required working against the grain of much NIME practice, especially the idea of separating controller, synthesizer and loudspeaker into their own enclosures. Such a separation might have been economical at a time when enclosures, connectors and cables were cheaper than the electronic components they house and connect, but now the opposite is true. Instead of assembling a large number of cheap, specialized monofunctional components, the fingerphone uses a plurifunctional design approach where materials are chosen, shaped and interfaced to serve many functions concurrently. The paper components of the fingerphone serve as interacting surface, medium for inscription of fiducials, sounding board and substrate for the electronics. The first prototype fingerphone was built into a recycled pizza box. The version presented at the NIME conference was integrated into the poster used for the presentation itself. This continues a practice I first developed with e-textiles: choosing materials that give design freedoms of scale and shape instead of using rigid circuit boards and off-the-shelf sensors. This approach will continue to flourish and become more commonplace as printing techniques for organic semiconductors, sensors and batteries are massified.

The fingerphone has influenced work on printed loudspeakers by Jess Rowland and the sonification of compost by Noe Parker. Printed keyboards and speakers are now a standard application promoted by manufacturers of conductive and resistive inks.

In addition to providing builders with an interesting instrument design, I hope the fingerphone work will lead more instrument builders and players to explore the nascent field of critical organology, deepen discourse of axiological concerns in musical instrument design, and adduce early sustainability practices that ecomusicologists will be able to study.

The Paper FingerPhone: a case study of Musical Instrument Redesign for Sustainability

Adrian Freed

1. Introduction

1.1 Stylophone

The Stylophone is a portable electronic musical instrument that was commercialized in the 1970's and enjoyed a brief success primarily in the UK. This is largely attributable to its introduction on TV by Rolf Harris, its use in the song that launched David Bowie's career, "Space Oddity," and its appearance in a popular TV series "The Avengers". Three million instruments were sold by 1975. A generation later the product was relaunched. The artist "Little Boots" has prompted renewed interest in the product by showcasing it in her hit recording "Meddle".

1.2 Mottainai! (What a waste!)

The Stylophone in its current incarnation is wasteful in both its production and interaction design. The new edition has a surprisingly high parts count, material use and carbon footprint. The limited affordances of the instrument waste the efforts of most who try to learn to use it.

Musical toy designers evaluate their products according to MTTC (Mean Time to Closet), and by how many battery changes consumers perform before putting the instrument aside [1]. Some of these closeted instruments reemerge a generation later when "old" becomes the new "new"–but most are thrown away.

This paper addresses both aspects of this waste by exploring a rethinking and redesign of the Stylophone, embodied in a new instrument called the Fingerphone.

1.3 History

The Stylophone was not the first stylus-based musical instrument. Professor Robert Watson of the University of Texas built an “electric pencil” in 1948 [2]. The key elements for a wireless stylus instrument are also present in the David Grimes patent of 1931 [3] including conductive paper and signal synthesis from position-sensing potentiometers in the pivots of the arms of a pantograph. Wireless surface sensing like this wasn’t employed commercially until the GTCO Calcomp Interwrite’s Schoolpad of 1981.

Electronic musical instruments like the Fingerphone with unencumbered surface interaction were built as long ago as 1748 with the Denis d’Or of Václav Prokop Diviš. Interest in and development of such instruments continued with those of Elisha Gray in the late 1800’s, Theremin in the early 1900’s, Eremeeff, Trautwein, Lertes, Heller in the 1930’s, Le Caine in the 1950’s, Michel Waisvisz and Don Buchla in the 1960’s, Salvatore Martirano and the circuit benders in the 1970’s [4].

1.4 Contributions

The basic sensing principle, sound synthesis method and playing style of the Stylophone and Fingerphone are well known so the novel aspects of the work presented here are in the domain of the tools, materials, form and design methods with which these instruments are realized.

Contributions of the paper include: a complete musical instrument design that exploits the potential of paper sensors, a novel strip origami pressure sensor, surface e-field sensing without external passive components, a new manual layout to explore sliding finger gestures, and suggestions of how to integrate questions of sustainability and longevity into musical instrument design and construction.

2. The Fingerphone

2.1 Reduce

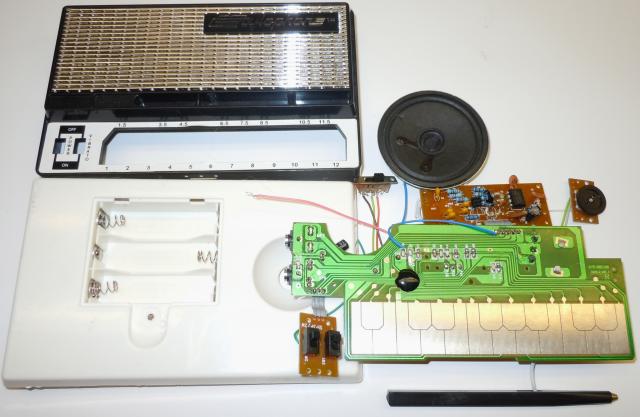

The Fingerphone (Figure 2) achieves low total material use, low energy cost and a small carbon footprint by using comparatively thin materials, recycled cellulose and carbon to implement the functions of the Stylophone without its high-energy cost and toxic materials: plastics, metals, glass fiber and resins.

Figure 2: The Fingerphone

The Stylophone contains two major, separate circuit boards with a different integrated circuit on each: one for the oscillator and stylus-board, the other for an LM386 power amplifier for the small speaker. The Fingerphone has only one integrated circuit, an Atmel 8-bit micro-controller, that is used to sense e-field touch and pressure on paper transducers, synthesize several digital oscillators and drive the sound transducer using an integrated pulse width modulation controller (PWM) as an energy-efficient, inductor-less class D amplifier.

The Fingerphone’s playing surface, switches and volume control functions are achieved using conductive paper [5, 6]. Various other materials were explored including embroidered silver plaited nylon thread (Figure 3), and a water-based silk-screened carbon-loaded ink (Figure 4).

Figure 3: Embroidered Manual

Figure 4: Printed Manual

Paper is an interesting choice because cellulose, its core component, is the most common polymer, one that can be harvested sustainably and is also readily available as a recycled product.

Complete carbon footprint and lifecycle cost analyses are notoriously hard to do well, but we can use some simple measures as proxies. The Stylophone has 65 components; a production Fingerphone would have only six. Manufacturing process temperature is another useful proxy: the Stylophone’s metals, plastic and solder suggest a much higher cost than those associated with paper. At first glance it would appear that the waste stream from the paper of the Fingerphone might be more expensive than that of the Stylophone. In fact they are similar because of the packaging of the reels that hold the surface mount parts during manufacturing of the Stylophone. The Fingerphone waste paper stream can be recycled back into future Fingerphones.

In some products, such as grocery bags, plastic compares favorably to paper in terms of environmental impact and production energy budgets. Paper has the advantage in musical instruments such as the Fingerphone of providing a medium to inscribe multiple functions, a plurifunctionality difficult to achieve with plastics or metals. These functions include: visual and tactile fiducials for the performer, highly conductive and insulating regions for the playing surface, a membrane for the bending wave sound transducer and an absorbent and thermally insulating substrate for connections and support of the micro-controller and output transducer. This plurifunctionality is found in traditional fretted chordophones: frets serve as fiducials, to define the length of the sounding string, as a fulcrum for tension modulation of the string and as an anvil to transfer energy to the string in the "hammer on" gesture.

Capacitive sensing of the performer's digits obviates the need for the Stylophone's metal wand and connecting wire entirely. Employing a distributed-mode driver eliminates the need for a loudspeaker cone and metal frame. In this way the entire instrument surface can be used as an efficient radiator.

The prototype of Figure 2 uses a small, readily available printed circuit board for the Atmel micro-controller; the production version would instead use the common "chip on board" technique observable as a black patch of epoxy on the Stylophone oscillator board, on cheap calculators and other high volume consumer products. This technique has been successfully used already for paper and fiber substrates as in Figure 5 [7].

Figure 5: Chip on Fabric

In conventional electronic design the cost of simple parts such as resistors and capacitors is considered to be negligible; laptop computers, for example, employ hundreds of these discrete surface mounted parts. This traditional engineering focus on acquisition cost from high volume manufacturers doesn’t include the lifecycle costs and, in particular, ignores the impact of using such parts on the ability for users to eventually recycle or dispose of the devices. Rather than use a conventional cost rationale the Fingerphone design was driven by the question: how can each of these discrete components be eliminated entirely? For example, Atmel provides a software library and guide for capacitance sensing. Their design uses a discrete resistor and capacitor for each sensor channel. The Fingerphone uses no external resistors or capacitors. The built-in pull-up resistors of each I/O pin are used instead in conjunction with the ambient capacitance measured between each key and its surrounding keys.
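A common way to sense capacitance with nothing but a pull-up resistor is to time how long a pin takes to rise to the logic threshold; whether the Fingerphone firmware does exactly this is not stated here, so the following is only a worked illustration of why a pull-up plus ambient capacitance can suffice. The resistance, capacitance and threshold values are assumptions for the arithmetic and are not measurements from the instrument.

```python
import math

def rise_time(resistance_ohms, capacitance_farads, threshold_fraction=0.6):
    """Time for an RC node charging toward the supply to cross the input threshold.

    Derived from V(t) = Vcc * (1 - exp(-t / (R * C))) solved for V = threshold * Vcc.
    """
    return -resistance_ohms * capacitance_farads * math.log(1.0 - threshold_fraction)

PULL_UP = 35e3     # ohms; assumed internal pull-up value
AMBIENT = 10e-12   # farads between a key and its neighbours; assumed
FINGER = 5e-12     # extra farads added by a touching finger; assumed

untouched = rise_time(PULL_UP, AMBIENT)
touched = rise_time(PULL_UP, AMBIENT + FINGER)
```

Because the rise time is proportional to capacitance, the assumed touch lengthens it by half in this example, a difference a micro-controller can detect by counting clock cycles without any external resistor or capacitor.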

The Stylophone has a switch to engage a fixed-frequency, fixed-depth vibrato, and rotary potentiometers to adjust pitch and volume. These functions are controlled on the Fingerphone using an origami piezoresistive sensor and linear paper potentiometers. The former is a folded strip of paper using a flattened thumb knot that forms a pentagon (Figure 6). Notice that three connections are made to this structure, eliminating the need for a pull-up resistor and establishing a ratiometric measure of applied pressure.

Figure 6: Origami Force Sensor

The remaining discrete components on the micro-controller board can be eliminated in a production version: The LED and its series resistor are used for debugging—a function easily replaced using sound [8]. The micro-controller can be configured to not require either a pull-up resistor or reset button and to use an internal RC clock instead of an external crystal or ceramic resonator. This RC clock is not as accurate as the usual alternatives but certainly is as stable as the Stylophone oscillator. This leaves just the micro-controller’s decoupling capacitor.

The magnet of the sound transducer shown in Figure 2 is one of the highest energy-cost devices in the design. A production version would use a piezo/ceramic transducer instead. These have the advantage of being relatively thin (1-4mm) and are now commonly used in cellphones and similar portable devices because they don't create magnetic fields that might interfere with the compasses now used in portable electronics. By controlling the shape of the conductive paper connections to a piezo/ceramic transducer a low-pass filter can be tuned to attenuate high frequency aliasing noise from the class D amplifier.

2.2 Reuse

Instead of the dedicated battery compartment of the Stylophone, the Fingerphone has a USB mini connector so that an external, reusable source of power can be connected, one that is likely to be shared among several devices, e.g., cameras, cellphones, or laptop computers. Emergency chargers for cellphones that use rechargeable lithium batteries and a charging circuit are a good alternative to a disposable battery (Figure 7).

Figure 7: Reusable Power Sources

This approach of providing modular power sources shared between multiple devices may be found in modern power tool rechargeable battery packs, and in the Home Motor of 1916. This was available from the Sears mail order catalog with attachments for sewing, buffing, grinding, and sexual stimulation [9].

The Fingerphone components are installed on a light, stiff substrate to provide a resonating surface for the bending mode transducers. This has been found to be a good opportunity for reuse so prototype Fingerphones have been built on the lid of a pizza box, a cigar box, and a sonic greeting card from Hallmark - all of which would normally be discarded after their first use. Such reuse has precedent in musical-instrument building, e.g., the cajon (cod-fish shipping crates), the steel-pan (oil drums), and ukulele (cigar boxes).

2.3 Recycle

The bulk of the Fingerphone is recyclable, compostable paper. A ring of perforations in the paper around the micro-controller would facilitate separation of the small non-recyclable component from the recyclable paper.

3. Use Maximization

3.1 Introduction

The Stylophone has a single, strident, sawtooth-wave timbre. There is no control over the amplitude envelope of the sawtooth wave other than to turn it off. This guarantees (as with the kazoo, harmonica, and vuvuzela) that the instrument will be noticed, an important aspect of the gift exchange ritual usually associated with the instrument. This combination of a constrained timbre and dynamic envelope presents interesting orchestration challenges. These have been addressed by David Bowie and Little Boots in different ways. In early recordings of "Space Oddity" the Stylophone is mostly masked by rich orchestrations, in much the way the string section of an orchestra balances the more strident woodwinds such as the oboe. Little Boots’ "Meddle" begins by announcing the song's core ostinato figure, the hocketing of four staccato "call" notes on the Stylophone with "responding" licks played on the piano. The lengths of call and response are carefully balanced so that the relatively mellow instrument, the piano, is given more time than the Stylophone.

3.2 Timbre

The oscillators of the Fingerphone compute a digital phasor using 24-bit arithmetic and index tables that include sine and triangle waves. The phasor can also be output directly or appropriately clipped to yield approximations to sawtooth and square/pulse waves respectively. Sufficient memory is available for custom waveshapes or granular synthesis. The result is greater pitch precision and more timbral options than the Stylophone.
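The phasor technique described above can be sketched as a phase-accumulator oscillator. This Python illustration is not the Fingerphone firmware: only the 24-bit phasor width comes from the text, while the 256-entry table, the sample rates, and the function names are assumptions for the example.

```python
import math

PHASE_BITS = 24                      # phasor width given in the text
PHASE_MASK = (1 << PHASE_BITS) - 1
TABLE_BITS = 8                       # assumed: 256-entry wavetable
TABLE_SIZE = 1 << TABLE_BITS
SINE_TABLE = [math.sin(2 * math.pi * i / TABLE_SIZE) for i in range(TABLE_SIZE)]

def phase_increment(frequency_hz, sample_rate_hz):
    """Phase step per sample so the phasor wraps `frequency_hz` times a second."""
    return round(frequency_hz * (1 << PHASE_BITS) / sample_rate_hz)

def render(frequency_hz, sample_rate_hz, n_samples, shape="sine"):
    """Generate samples in [-1, 1] from a wrapping 24-bit phasor."""
    phase = 0
    step = phase_increment(frequency_hz, sample_rate_hz)
    out = []
    for _ in range(n_samples):
        if shape == "sine":
            # The top bits of the phasor index the table.
            out.append(SINE_TABLE[phase >> (PHASE_BITS - TABLE_BITS)])
        elif shape == "saw":
            # The phasor itself, rescaled to [-1, 1), is a sawtooth.
            out.append(2.0 * phase / (1 << PHASE_BITS) - 1.0)
        elif shape == "square":
            # Clipping the phasor to its top bit gives a square wave.
            out.append(1.0 if phase >> (PHASE_BITS - 1) else -1.0)
        else:
            raise ValueError(shape)
        phase = (phase + step) & PHASE_MASK
    return out
```

The pitch precision follows from the accumulator width: the smallest frequency step is the sample rate divided by 2^24, which at an assumed 8 kHz sample rate is about 0.0005 Hz. A triangle table or a custom waveshape would simply replace `SINE_TABLE`.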

3.3 Dynamics

An envelope function, shaped according to the touch expressivity afforded by electric field sensing, modulates the oscillator outputs of the Fingerphone. The level of dynamic control achieved is comparable to the nine "waterfall" key contacts of the Hammond B3 organ.

Legato playing is an important musical function and it requires control of note dynamics. The audible on/off clicks of the Stylophone disrupt legato to such an extent that the primary technique for melodic playing of the instrument is to rapidly slide the stylus over the keys to create a perceived blurring between melody notes. The dedicated performer with a steady hand can exploit a narrow horizontal path halfway down the Stylophone stylus-board to achieve a chromatic run rather than the easier diatonic run.

Legato in the Fingerphone is facilitated by duophony so that notes can actually overlap, as in traditional keyboard performance. Full, multi-voice polyphony is also possible with a faster micro-controller or by taking advantage of remote synthesis resources driven by the OSC and MIDI streams flowing from the Fingerphone’s USB port.

3.4 Manual Layouts

Figure 8: Trills

Surface interaction interfaces provide fundamentally different affordances from those of sprung or weighted action keyboards. In particular, it is slower and harder to control release gestures on surfaces because they don’t provide the stored energy of a key to accelerate and preload the release gesture. This factor and the ease of experimentation with paper suggest a fruitful design space to explore: new surface layout designs. The layout illustrated in Figure 4 resulted from experiments with elliptical surface sliding gestures that were inspired by the way Dobro and lap-style guitar players perform vibrato and trills. Various diatonic and chromatic ascending, descending and cyclical runs and trills can be performed by orienting, positioning and scaling these elliptical and back-and-forth sliding gestures on the surface.

3.5 Size Matters

By scaling the layout to a comfortable finger size, it is possible to play the white "keys" between the black ones, something that is impossible with the Stylophone layout.

The interesting thing about modulations of size in interactive systems is that continuous changes are experienced as qualitatively discrete, i.e., for each performer, certain layouts become too small to play reliably or too large to play efficiently. The economics of mass manufacturing interacts with this in a way that historically has narrowed the number of sizes of instruments that are made available. For example, the Jaranas of the Jarochos of Mexico are a chordophone that players build for themselves and their children. They are made “to measure” with extended families typically using seven or eight different sizes. The vast majority of manufactured guitars on the other hand are almost entirely “full size” with a few smaller sizes available for certain styles. This contrasting situation was also present with the hand-built fretless banjos of the 19th century, now displaced by a few sizes of manufactured, fretted banjos.

In the case of the Stylophone the NRE (Non-recurring Engineering) costs for two molds and the circuit boards discourage the development of a range of sizes. There are also costs associated with the distribution and shelving in stores of different sizes. The lower cost structures of the Fingerphone on the other hand allow for a wider range of sizes. Prototypes have been developed by hand and with a cheap desktop plotter/cutter. Different scales can be experimented with in minutes instead of the hours required to develop circuit boards. Also, die cutting of paper is cheaper than injection molding or etching in production.

The use of a finger-size scale would appear to put the Fingerphone at a portability disadvantage with respect to the Stylophone. It turns out that fabric and paper allow for folded Fingerphones that are no larger than the Stylophone for transport. Roll-up computer keyboards and digitizing tablets are precedents for this approach.

4. Discussion

4.1 Impact

By itself the Fingerphone will not have a significant direct impact on the sustainability issues the world faces. However, now that musical instrument building is being integrated as standard exercises in design school classes, the Fingerphone can serve as a strong signal that more environmentally responsible materials and design techniques are available.

4.2 Design Theory

Simondon’s thesis on the technical object [10] describes the value of plurifunctionality to avoid the pitfalls of “hypertelic and maladapted designs”. Judging by the number of huge catalogs of millions of highly functionally-specific electronic parts now available, the implications of Simondon’s philosophical study were largely ignored. The Fingerphone illustrates how plurifunctionality provides designers with a route to economies of scale other than the usual high-volume-manufacturing one: the cost of development is amortized over a large number of inscribed functions instead of a large number of high-volume parts.

4.3 Transitional Instruments

The Fingerphone adds to a debate in the NIME community about accessibility, ease of use and virtuosity. Wessel and Wright declare that it is possible to build instruments with a low entry point and no ceiling on virtuosity [11]. Blaine and Fels argue that this consideration is irrelevant to casual users of collaborative instruments [12]. Isn’t there a neglected space in between, of transitional instruments that serve people on a journey as they acquire musical skills and experience? Acoustic instrument examples include the melodica, ukulele and recorder. The Stylophone, in common with Guitar Hero and Paper Jamz, is designed with a primary focus on social signaling of musical performance. The Fingerphone shows that affordable instruments may be designed that both call attention to the performer and also afford the exercise and development of musical skills, and a facilitated transition to other instruments.

ACKNOWLEDGMENTS

Thanks for support from Pixar/Disney, Meyer Sound Labs, Nathalie Dumont from the Concordia University School of Fine Arts and the Canada Grand project.

REFERENCES

[1] S. Capps, "Toy Musical Instrument Design," Personal Communication, Menlo Park, 2011.

[8] A. Turing, Manual for the Ferranti Mk. I. University of Manchester, 1951.

[10] G. Simondon, Du mode d'existence des objets techniques. Paris: Aubier-Montaigne, 1958.

David Wessel’s Slabs: a case study in Preventative Digital Musical Instrument Conservation

Mon, 01/15/2018 - 14:38 - AdrianFreed

Audio Sine and Square Wave Signal Generator using a Wavetable

Wed, 02/24/2010 - 00:09 - AdrianFreed

I discovered by accident that some folk from Greece published the same ideas in a professional technical journal in 1989 (attached). If you are a scholar of such things it is interesting to compare and contrast the modes of articulation in my vernacular engineering approach with those of the academic paper.

As I review this design from the 1970's I am struck by the exotic mixture of TTL/CMOS logic/CMOS switches, transistors and opamps that were required to pull it off. Although it might seem that such techniques are obsolete, I have seen plenty of recent Arduino designs using resistor networks that have faced the same challenge of creating a function with positive and negative values from binary sources.

Q: How would I do it differently today? A: I would replace the 555 timer and TTL parts with an Arduino and use a rail-to-rail inverting opamp.

Interestingly, the parts required are all still available and the Arduino solution would cost about the same. The main cost difference would probably be from the two-pole multiway switches, which are expensive these days.